The Launch

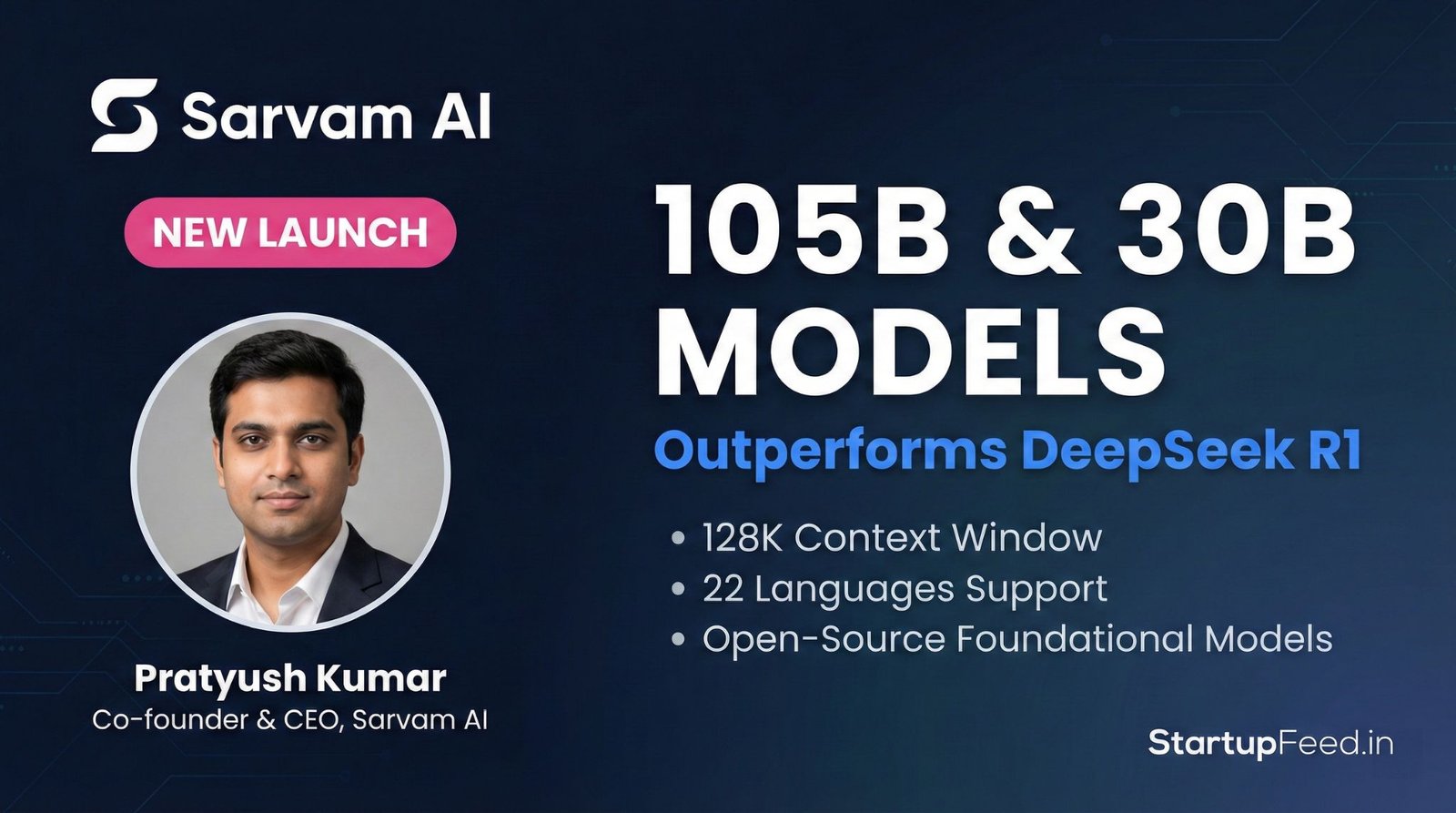

Bengaluru-based AI startup Sarvam AI officially launched two foundational large language models, Sarvam-30B and Sarvam-105B, at the India AI Impact Summit in New Delhi on February 18, 2026. The company is positioning the Sarvam AI 105B model as India’s most capable open-source LLM: by Sarvam’s own benchmarks, it outperforms DeepSeek R1 on reasoning tasks while costing less to run than Google’s Gemini Flash.

This signals a pivotal shift in India’s AI stack. With both models built entirely from scratch — without reliance on external datasets — Indian enterprises and government agencies now have a sovereign, cost-effective alternative to OpenAI and Anthropic APIs. Every startup burning cash on foreign LLM tokens should be taking notes.

What’s New: The Sarvam AI 105B Model and Its Sibling

Sarvam AI is one of four government-selected sovereign AI builders under India’s IndiaAI Mission — alongside Soket AI, Gnani AI, and Gan AI. The latest release marks a dramatic leap, introducing two models built on a Mixture-of-Experts (MoE) architecture:

- Sarvam-30B: Pre-trained on 16 trillion tokens, with a 32,000-token context window. Optimised for efficiency on reasoning and thinking benchmarks at 8K and 16K scales. Designed for population-scale accessibility, including voice-based interactions on basic feature phones.

- Sarvam-105B: The flagship model activates only 9 billion of its 105 billion parameters per token and supports a 128,000-token context window, enough for long documents and complex agentic workflows. Sarvam benchmarked it against OpenAI’s GPT-OSS-120B and Alibaba’s Qwen3-Next-80B, and claims superior performance over Google Gemini 2.5 Flash on Indian-language tasks.

Both models support 22 Indian languages including Hindi, Marathi, Tamil, Telugu, Bengali, and Punjabi — a capability gap that no foreign LLM has meaningfully closed at this quality level.

Why the Sarvam AI 105B Model Matters for Indian Founders

The economics of building AI-powered products in India have been brutal. Startups integrating GPT-4 or Claude face API costs that can eat up 15-30% of their per-unit revenue. The Sarvam AI 105B model, priced below Gemini Flash while outperforming it on Indian-language benchmarks, changes that calculus fundamentally.
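To make the 15-30% range concrete, the sketch below computes a product’s monthly LLM API bill and the share of revenue it consumes. Every number in it (token volumes, revenue and both per-million-token prices) is a hypothetical placeholder, not a published rate from Sarvam, OpenAI, Anthropic or Google; substitute your own usage logs and each provider’s current rate card.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Monthly LLM usage for one product (all figures hypothetical)."""
    input_tokens: int       # prompt tokens sent per month
    output_tokens: int      # completion tokens received per month
    monthly_revenue: float  # revenue the product earns per month, in USD


@dataclass
class Pricing:
    """Per-million-token prices in USD (placeholders, not real rate cards)."""
    input_per_m: float
    output_per_m: float


def monthly_api_cost(w: Workload, p: Pricing) -> float:
    """API bill: tokens divided by one million, times the per-million price."""
    input_cost = (w.input_tokens / 1_000_000) * p.input_per_m
    output_cost = (w.output_tokens / 1_000_000) * p.output_per_m
    return input_cost + output_cost


def cost_share_of_revenue(w: Workload, p: Pricing) -> float:
    """Fraction of monthly revenue consumed by LLM API spend."""
    return monthly_api_cost(w, p) / w.monthly_revenue


if __name__ == "__main__":
    workload = Workload(
        input_tokens=2_000_000_000,
        output_tokens=500_000_000,
        monthly_revenue=40_000.0,
    )
    incumbent = Pricing(input_per_m=2.50, output_per_m=10.00)  # hypothetical prices
    cheaper = Pricing(input_per_m=0.50, output_per_m=2.00)     # hypothetical prices
    for name, price in [("incumbent", incumbent), ("cheaper", cheaper)]:
        cost = monthly_api_cost(workload, price)
        share = cost_share_of_revenue(workload, price)
        print(f"{name}: ${cost:,.0f}/month, {share:.1%} of revenue")
```

With these placeholders the incumbent bill is $10,000 a month (25% of revenue) against $2,000 (5%) at the cheaper rate. The point is the structure of the comparison, not the specific figures.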

Three reasons this release is the most important AI launch from Bengaluru this year:

- Sovereign data privacy: All training was done on domestic data with no foreign dependencies. For BFSI, healthtech, and govtech startups handling sensitive Indian user data, this is critical compliance headroom.

- Government infrastructure backing: Sarvam AI received nearly Rs 99 crore in GPU subsidies through the IndiaAI Mission — 4,096 NVIDIA H100 SXM GPUs via Yotta Data Services — making it the mission’s biggest beneficiary so far.

- Enterprise + partner ecosystem: The company announced key integrations with Qualcomm, Bosch, and Nokia, alongside a roadmap including Sarvam for Work (enterprise coding tools) and Samvaad (conversational AI for Indian languages).

How the Sarvam AI 105B Model Works

Both models were trained entirely from scratch — not fine-tuned on existing open-source systems. CEO and co-founder Pratyush Kumar was direct: “Everything that Sarvam has built is from scratch, with no data dependency.”

The MoE architecture activates only about 9 billion of the 105B model’s parameters for each token, sharply cutting per-token compute compared with a dense 105B model. This is the same architectural bet that made DeepSeek competitive; Sarvam says it has now matched that approach and, on Indian-language tasks, surpassed it.
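The mechanism behind "activating only 9 billion parameters" is top-k expert routing: a small router scores every expert for each token, and only the k highest-scoring experts actually run. The toy sketch below uses tiny, made-up sizes (8 experts, top-2, 4-dimensional vectors) and single linear maps as experts; Sarvam’s real expert count, routing scheme and value of k are not stated here, so none of these values describe the actual model.

```python
import math
import random

DIM = 4          # hidden size of each token vector (toy value, hypothetical)
NUM_EXPERTS = 8  # experts in the layer (toy value, hypothetical)
TOP_K = 2        # experts that run for each token (toy value, hypothetical)


def random_matrix(rng, rows, cols):
    """Build a rows x cols matrix of small Gaussian weights."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMoELayer:
    """One mixture-of-experts layer with top-k routing (illustrative only)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Router: one row of scoring weights per expert (NUM_EXPERTS x DIM).
        self.router = random_matrix(rng, NUM_EXPERTS, DIM)
        # Each expert here is a single DIM x DIM linear map; real experts are MLPs.
        self.experts = [random_matrix(rng, DIM, DIM) for _ in range(NUM_EXPERTS)]

    def forward(self, token):
        """Send one token to its TOP_K best-scoring experts and mix their outputs."""
        scores = matvec(self.router, token)
        chosen = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
        gates = softmax([scores[i] for i in chosen])  # weights over chosen experts only
        out = [0.0] * DIM
        for gate, idx in zip(gates, chosen):
            expert_out = matvec(self.experts[idx], token)  # only chosen experts compute
            out = [o + gate * e for o, e in zip(out, expert_out)]
        return out, chosen


def parameter_counts():
    """Return (total, active-per-token) parameter counts for the toy layer."""
    router_params = NUM_EXPERTS * DIM
    expert_params = DIM * DIM
    total = router_params + NUM_EXPERTS * expert_params
    active = router_params + TOP_K * expert_params
    return total, active


if __name__ == "__main__":
    layer = ToyMoELayer(seed=42)
    output, experts_used = layer.forward([1.0, -0.5, 0.25, 2.0])
    print("experts used:", experts_used)
    print("total vs active params:", parameter_counts())  # (160, 64)
```

Only two of the eight expert matrices are touched per token, so the toy layer stores 160 parameters but uses 64 for any one token. A production MoE applies the same principle at vastly larger scale, typically with extra load-balancing terms in the router that this sketch omits.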

Training ran on infrastructure from Yotta Data Services with technical support from Nvidia, provisioned under the government-backed IndiaAI Mission and its Rs 10,000 crore fund.

Competitive Landscape: How Sarvam AI 105B Stacks Up

| Feature | Sarvam AI 105B | DeepSeek R1 | OpenAI GPT-4o |
|---|---|---|---|
| Indian Language Support | 22 languages (native) | Limited | Limited |
| Open Source | Yes | Yes | No |
| Context Window | 128K tokens | 64K tokens | 128K tokens |
| Built in India | Yes | No (China) | No (USA) |
| Inference Cost vs Gemini Flash | Cheaper | Comparable | Higher |
| Data Sovereignty | 100% domestic | No | No |
| Reasoning Benchmark | Beats DeepSeek R1 | Baseline | Strong |

| StartupFeed Insight |

| What the numbers say: A 105B MoE model that activates 9B parameters per token needs roughly 10x less compute per token than a dense 105B model (105 ÷ 9 ≈ 11.7), although all 105B weights still have to be held in memory. Sarvam AI hasn’t just built a competitive LLM; it has built a structurally cheap one just as the Indian enterprise AI market is about to hit scale. |

| What this means for you: |

| If you’re a founder: Benchmark your current LLM API spend against Sarvam’s open-source pricing before your next Series A — switching could be the single highest-ROI engineering decision of 2026. |

| If you’re an investor: Any Indian SaaS or AI startup not evaluating Sarvam as their primary LLM layer is leaving a structural cost advantage on the table — ask your portfolio companies why. |

| If you’re an employee: Sarvam for Work and Samvaad signal 200+ enterprise and product roles opening in the next 12-18 months — their LinkedIn page is worth watching. |

| Our prediction: By Q4 2026, the Sarvam AI 105B model will power at least 3 major Indian government-facing AI products, and Sarvam will announce a Series B of $75-100 Mn at a valuation above $400 Mn — driven entirely by enterprise traction, not hype. |
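The roughly 10x figure follows from the ratio of total to active parameters. A common back-of-envelope rule puts a transformer forward pass at about 2 FLOPs per active parameter per token; the sketch below applies it to the article’s 105B-total and 9B-active figures. It assumes bf16 weights (2 bytes per parameter) and ignores attention cost, batching and hardware utilisation, so treat the output as an order-of-magnitude estimate, not Sarvam’s measured serving cost.

```python
BYTES_PER_PARAM = 2  # assumes bf16 weights; quantised deployments use fewer bytes

TOTAL_PARAMS = 105e9  # Sarvam-105B total parameters, per the article
ACTIVE_PARAMS = 9e9   # parameters active per token, per the article


def forward_flops_per_token(active_params: float) -> float:
    """Rule of thumb: about 2 FLOPs per active parameter per token.

    Ignores attention over the context window, which grows with sequence length.
    """
    return 2.0 * active_params


def weight_memory_gb(total_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return total_params * bytes_per_param / 1e9


def compare_dense_vs_moe(total_params: float, active_params: float) -> dict:
    """Contrast a dense model (all params active) with an MoE of the same size."""
    dense = forward_flops_per_token(total_params)
    moe = forward_flops_per_token(active_params)
    return {
        "dense_gflops_per_token": dense / 1e9,
        "moe_gflops_per_token": moe / 1e9,
        "compute_ratio": dense / moe,
        # Every expert must stay resident, so weight memory is the same for both.
        "weight_memory_gb": weight_memory_gb(total_params),
    }


if __name__ == "__main__":
    for key, value in compare_dense_vs_moe(TOTAL_PARAMS, ACTIVE_PARAMS).items():
        print(f"{key}: {value:.1f}")
```

On these assumptions the dense model needs about 210 GFLOPs per token versus about 18 for the MoE, a ratio of roughly 11.7, while both need about 210 GB just for bf16 weights. Real serving cost also depends on batching, long-context attention and GPU utilisation, none of which this arithmetic captures.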

In Their Own Words

“Everything that Sarvam has built is from scratch, with no data dependency. The model is cheaper than Gemini Flash… while delivering better performance on many benchmarks.”

— Pratyush Kumar, CEO & Co-Founder, Sarvam AI

Company Profile: Sarvam AI

| Attribute | Details |
|---|---|
| Founded | July 2023 |
| Headquarters | Bengaluru, Karnataka, India |
| Co-Founders | Vivek Raghavan and Pratyush Kumar (ex-AI4Bharat) |
| Total Funding | $50 Mn+ |
| Key Investors | Lightspeed Venture Partners, Khosla Ventures, Peak XV Partners |
| Government Backing | Rs 99 Cr subsidy; 4,096 NVIDIA H100 GPUs via IndiaAI Mission |
| Partnerships | Qualcomm, Bosch, Nokia |
| Government Role | One of 4 selected sovereign AI builders in India |

What’s Next for Sarvam AI

The company has laid out an ambitious product roadmap beyond the foundational models:

- Sarvam for Work: Enterprise-grade coding-focused models and productivity tools targeting Indian IT and services companies.

- Samvaad: A conversational AI agent platform built specifically for Indian languages — targeting customer service, IVR, and citizen services markets.

- Open-source release: Both 30B and 105B models will be open-sourced, though training data and full training code specifications are still to be confirmed.

- Expanded language coverage: Continued deepening of support across all 22 scheduled Indian languages beyond the current Hindi-dominant training distribution.